AI is changing the world around us—from how businesses operate to the products we use every day. But as companies deploy AI to optimize everything from customer experiences to operational workflows, they face a critical dilemma: How do we push the boundaries of innovation without losing sight of our ethical responsibilities?

The balance between AI-driven innovation and the moral obligations that come with it is delicate. On one hand, businesses are eager to capitalize on AI’s transformative potential. On the other hand, they must navigate issues like data privacy, algorithmic bias, and accountability in decision-making. Failing to strike this balance can lead to unintended consequences, from damaged reputations to regulatory crackdowns.

As we embrace AI’s incredible opportunities, we also need to ensure that we’re building systems that people can trust. Fostering trust and achieving long-term success means integrating ethics into the very DNA of AI development.

In this blog, we’ll explore how organizations can innovate responsibly, adopt ethical frameworks, and lead the way in building AI technologies that are both transformative and trustworthy. More importantly, we’ll look at why responsible AI development is not just a compliance issue but a business need in the race for sustainable innovation.

Prioritizing Ethical Considerations in AI Innovations

By delivering greater efficiency, automation, and cost savings, the integration of AI into business processes and products can revolutionize industries. However, without careful consideration, AI can also exacerbate existing biases and inequalities. Organizations must proactively ensure that AI innovations prioritize ethical considerations from the outset. This involves embedding ethical guidelines into every stage of the development process, from data collection to model deployment.

Take algorithmic bias, for example. AI models are trained on vast datasets, which may contain historical biases reflecting societal inequalities. If these biases are not mitigated, AI systems can perpetuate or even amplify them, affecting everything from hiring decisions to healthcare access. To counter this, organizations should implement rigorous testing and validation processes to identify biases and refine models accordingly. Regular audits and updates are also essential to ensure that AI systems remain fair and equitable as they evolve.
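One simple form such a bias test can take is comparing how often each group receives a favorable outcome. The sketch below is illustrative only: the group labels, the sample data, and the 0.8 review threshold (loosely based on the "four-fifths" rule of thumb) are assumptions, not a complete fairness audit.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the share of favorable outcomes for each group.

    `decisions` is an iterable of (group, outcome) pairs, where outcome
    is 1 for a favorable decision (e.g. shortlisted) and 0 otherwise.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest

# Hypothetical audit sample: (group label, model decision)
sample = [("A", 1), ("A", 1), ("A", 0), ("A", 1),
          ("B", 1), ("B", 0), ("B", 0), ("B", 0)]

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)

REVIEW_THRESHOLD = 0.8  # assumed cutoff for flagging a model for review
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 2), needs_review)
```

A check like this could run as part of each regular audit, so that a drop in the ratio after a model update is caught before it affects real decisions.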

Another critical issue is privacy. AI thrives on data, and there’s a fine line between delivering personalized experiences and infringing on individual rights. Organizations must prioritize transparency and consent to ensure data is used responsibly.

Maintaining Ethical AI While Fostering Innovation

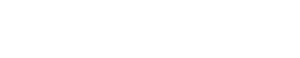

Balancing the drive for innovation with a commitment to ethical AI is a challenging, yet essential, task for companies operating in the digital age. This requires a culture of ethical innovation, where teams are encouraged to consider the broader impact of their work, so that technological advancement goes hand-in-hand with accountability and foresight.

One of the most effective ways to ensure this balance is by embedding ethics directly into the innovation process. This means bringing ethicists, legal experts, and a diverse range of stakeholders into the conversation from the outset. Involving voices from different backgrounds and disciplines allows companies to spot potential ethical dilemmas early, whether it’s the risk of bias, privacy violations, or unintended consequences. Proactively addressing these concerns during development, rather than after deployment, prevents costly issues down the road and strengthens the foundation of AI systems.

Another strategy is to invest in AI research that prioritizes ethical considerations. This includes exploring new methods for reducing bias, enhancing transparency, and improving the fairness of AI systems. By committing resources to understanding how to make AI more equitable and reliable, businesses can position themselves as industry leaders in ethical innovation.

Microsoft, for example, has integrated ethical considerations into its AI development process, ensuring that innovation does not come at the cost of ethical responsibility.

Frameworks and Guidelines for Responsible AI

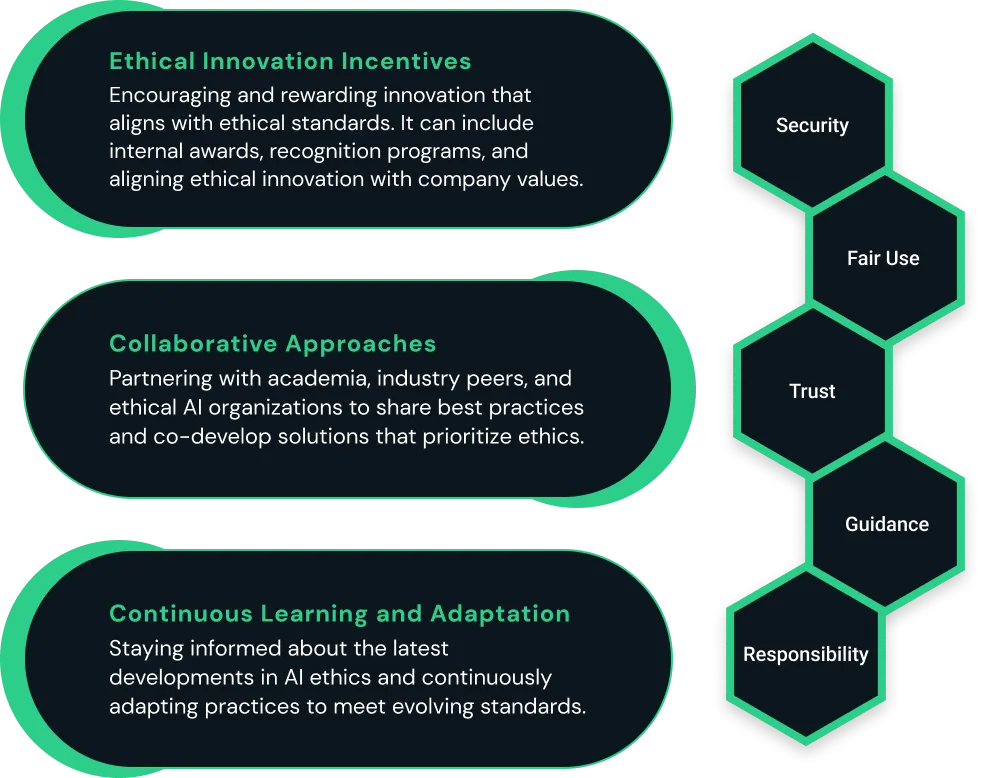

To balance the rapid advancement of AI technologies with the need for responsible usage, organizations can rely on various frameworks and guidelines designed to guide AI development. These frameworks help ensure that innovation doesn’t come at the cost of ethics. Among the most recognized are the Ethics Guidelines for Trustworthy AI, developed by the European Commission’s High-Level Expert Group on AI. These guidelines emphasize core principles such as transparency, accountability, and human-centricity.

- Transparency

To build trust in AI systems, transparency must be a priority. Organizations should ensure that AI processes are explainable and that stakeholders understand how decisions are made. This can be achieved through the development of interpretable models and the clear documentation of AI workflows.
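As a rough illustration of what an interpretable model can offer, the sketch below uses a small linear scoring model whose output breaks down into one contribution per input. The feature names, weights, and applicant values are hypothetical and chosen only to show the idea.

```python
import math

# Hypothetical weights of a simple, interpretable scoring model
WEIGHTS = {"income_thousands": 0.04, "debt_ratio": -3.0, "years_employed": 0.2}
BIAS = -1.0

def explain(applicant):
    """Return the approval probability and each feature's contribution,
    ranked from most to least influential."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-score))  # logistic function
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked

# Hypothetical applicant record
applicant = {"income_thousands": 50, "debt_ratio": 0.5, "years_employed": 3}
probability, ranked = explain(applicant)

for name, value in ranked:
    print(f"{name}: {value:+.2f}")
```

Because every contribution is just a weight multiplied by an input, the same breakdown can be recorded alongside each decision as part of the documentation trail.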

This could mean something as simple as showing which data points were most influential in determining a loan approval or why a particular treatment was suggested in healthcare. Additionally, organizations should be transparent about the data sources used and the potential limitations of their AI system. No AI is perfect, and understanding the boundaries of its capabilities, whether due to biased data or limitations in training, helps set realistic expectations.

- Accountability

One of the greatest risks with AI is its ability to make autonomous decisions, sometimes without sufficient oversight. AI’s decisions can have far-reaching consequences in critical sectors like healthcare, finance, and legal systems. This makes accountability another key aspect of ethical AI. Organizations should establish clear lines of responsibility for AI outcomes, ensuring that there is a human in the loop to oversee critical decisions.
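A human-in-the-loop rule can be as simple as a routing step placed in front of the model’s output. The sketch below is a minimal example under stated assumptions: the case types, the 0.9 confidence floor, and the `Decision` record are hypothetical, and a real system would also need review queues and audit logging.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    outcome: str       # final outcome, or "pending_review" if escalated
    confidence: float  # model confidence between 0 and 1
    decided_by: str    # "model" or "human_reviewer"

# Assumed policy values, for illustration only
CONFIDENCE_FLOOR = 0.9
HIGH_STAKES = {"loan_denial", "treatment_change"}

def route(case_type, model_outcome, confidence):
    """Escalate high-stakes or low-confidence cases to a human reviewer;
    let the model decide only routine, high-confidence cases."""
    if case_type in HIGH_STAKES or confidence < CONFIDENCE_FLOOR:
        return Decision("pending_review", confidence, "human_reviewer")
    return Decision(model_outcome, confidence, "model")

print(route("loan_denial", "deny", 0.99))
print(route("newsletter_ranking", "show", 0.95))
```

Recording who made each decision in the `decided_by` field also gives a clear answer later to the question of who was responsible for a given outcome.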

In practice, this calls for clear AI governance protocols, with someone accountable at each stage of the AI lifecycle, from development to deployment and post-deployment monitoring. This is particularly important in high-stakes applications, where AI-driven decisions can have significant consequences.

- Human-Centricity

AI should work for people, not the other way around. The principle of human-centricity focuses on ensuring that AI technologies are designed with the end-user’s well-being in mind. In practice, this means prioritizing privacy, autonomy, and fairness in every aspect of AI development. For example, AI-driven hiring tools must be designed to minimize bias and ensure fair treatment for all candidates.

Other bodies offer similar guidance. The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems, for instance, provides comprehensive guidelines to help organizations navigate the ethical challenges of AI development.

The Role of Government and Regulatory Bodies

While the onus is on organizations to embed ethical principles into their AI practices, government and regulatory bodies play a major role in shaping and enforcing these standards. These entities are tasked with establishing the legal and ethical standards that guide AI development and deployment, ensuring that emerging technologies align with societal values and protect individuals and communities.

Regulatory bodies can promote ethical AI by setting clear guidelines and standards for AI development. These standards should be flexible enough to accommodate innovation while providing a robust framework for ethical considerations. Governments can also support ethical AI by funding research into responsible AI practices and by encouraging collaboration between industry, academia, and civil society.

In addition to setting standards, regulatory bodies must also enforce them. This involves monitoring AI systems for compliance and taking action when ethical breaches occur. By holding organizations accountable, regulators help ensure that AI technologies are developed and used in ways that benefit society as a whole.

Ethical AI for Startups vs. Large Enterprises

The balance between rapid innovation and ethical responsibility looks different for startups and established companies. Startups thrive on flexibility and risk-taking, often embracing bold new ideas, but they tend to lack the resources and expertise needed for formal ethical practices. Their agile nature can drive innovation but may also lead to ethical oversights if not carefully managed.

In contrast, established companies benefit from greater resources and structured processes, allowing for more formalized ethical practices and compliance. Their larger scale enables them to implement comprehensive frameworks and engage in long-term ethical planning, though this can sometimes slow the pace of innovation.

Both types of organizations face unique pressures and opportunities. Startups can leverage their agility to integrate ethical considerations from the ground up, while established companies can build on their extensive experience to refine and uphold ethical standards. Ethical AI is not one-size-fits-all, but a dynamic balance tailored to each organization’s approach and capacity.

Learn More About AI Implementation

Contact our experts for a FREE consultation about how you can achieve the maximum potential of your organization’s data by leveraging Celestial’s end-to-end data engineering and production AI solutions.